Tech

Meta researchers build an AI that learns equally well from visual, written or spoken materials – TechCrunch

Advances in the AI realm are constantly coming out, but they tend to be limited to a single domain: For instance, a cool new method for producing synthetic speech isn’t also a way to recognize expressions on human faces. Meta (AKA Facebook) researchers are working on something a little more versatile: an AI that can learn capably on its own, whether from spoken, written or visual materials.

The traditional way of training an AI model to correctly interpret something is to give it lots and lots (like millions) of labeled examples. A picture of a cat with the cat part labeled, a conversation with the speakers and words transcribed, etc. But that approach is no longer in vogue as researchers found that it was no longer feasible to manually create databases of the sizes needed to train next-gen AIs. Who wants to label 50 million cat pictures? Okay, a few people probably — but who wants to label 50 million pictures of common fruits and vegetables?

Currently some of the most promising AI systems are what are called self-supervised: models that can work from large quantities of unlabeled data, like books or video of people interacting, and build their own structured understanding of the rules of the system. For instance, by reading a thousand books a model will learn the relative positions of words and ideas about grammatical structure without anyone telling it what objects or articles or commas are; it gets there by drawing inferences from lots of examples.
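As a toy illustration of that idea (not data2vec's method, and far simpler than any real system), the sketch below learns which word tends to follow which from raw text alone. The corpus and the function names `train_bigrams` and `predict_next` are made up for this example; the point is only that the text supplies its own training targets, with no human labels.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count which word follows which; the raw text supplies its own targets."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(following, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = following.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical unlabeled corpus: nobody has marked nouns, articles or punctuation.
corpus = "the cat sat on the mat . the cat ate the fish . the cat slept ."
model = train_bigrams(corpus)

print(predict_next(model, "the"))  # prints "cat"
```

Nothing here was told that "the" is an article or "cat" a noun; the regularity falls out of the counts, which is the same principle at a vastly smaller scale.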

This feels intuitively more like how people learn, which is part of why researchers like it. But the models still tend to be single-modal, and all the work you do to set up a self-supervised learning system for speech recognition won’t apply at all to image analysis; the two are simply too different. That’s where Facebook/Meta’s latest research, the catchily named data2vec, comes in.

The idea for data2vec was to build an AI framework that would learn in a more abstract way, meaning that starting from scratch, you could give it books to read or images to scan or speech to sound out, and after a bit of training it would learn any of those things. It’s a bit like starting with a single seed, but depending on what plant food you give it, it grows into a daffodil, pansy or tulip.

Testing data2vec after letting it train on various data corpora showed that it was competitive with, and even outperformed, similarly sized dedicated models for that modality. (That is to say, if the models are all limited to being 100 megabytes, data2vec did better; specialized models would probably still outperform it as they grow.)

“The core idea of this approach is to learn more generally: AI should be able to learn to do many different tasks, including those that are entirely unfamiliar,” wrote the team in a blog post. “We also hope data2vec will bring us closer to a world where computers need very little labeled data in order to accomplish tasks.”

“People experience the world through a combination of sight, sound and words, and systems like this could one day understand the world the way we do,” commented CEO Mark Zuckerberg on the research.

This is still early stage research, so don’t expect the fabled “general AI” to emerge all of a sudden — but having an AI that has a generalized learning structure that works with a variety of domains and data types seems like a better, more elegant solution than the fragmented set of micro-intelligences we get by with today.

The code for data2vec is open source; it and some pretrained models are available here.

Tech

Model doesn't feel safe wearing designer clothes in Canada's biggest city | Canada – Daily Hive

A model says she feels like a “sitting duck” wearing designer clothes in downtown Toronto amid a general state of unease in the city in response to an uptick in violent crimes.

Hanya Kizemchuk, a local model, posted a video on Instagram and TikTok in which she claimed that she sprinted two blocks to her car after a recent modelling shoot in Toronto, overcome with the sense that her expensive attire read as “a stop sign screaming ‘rob me.’”

In the video, Kizemchuk describes the scene on a cold, rainy night after finishing a shoot, explaining, “I wrapped my head in my Louis Vuitton wrap. I had my Louis Vuitton duffle bag with all my shoes and makeup and whatever I need for that job. I was wearing my Gucci crossover and I was wearing my black leather Burberry coat.”

“And as I jumped out onto the street, I have to say that I realized for the first time ever in the city of downtown Toronto, I was truly like a sitting duck and that this is no longer okay to be running around like this, that I need to be a little more downplayed so that I don’t attract attention.”

Kizemchuk says she was “a little unnerved” and felt compelled to run “two blocks to my car and continuously check to see if anyone was popping out from somewhere because I was like a stop sign screaming, ‘Rob me.’”

“And that’s how I felt for the first time ever in this beautiful city of Toronto, which I grew up in and don’t recognize anymore.”

A few chimed in, sharing comments siding with Kizemchuk.

Unfortunately crime has increased everywhere. If you are on the street in downtown Toronto, and you are decked out head to toe in designer goods, you are calling attention to yourself and you would be lucky if you’re not mugged. Sadly, it is the same or worse in all big cities.

— john smith (@jsmith9999992) April 18, 2024

Others questioned why she would run away without identifying any specific threats and then make a post online about feeling unsafe.

So nothing happened? She just felt scared walking to her car and no one was around?

— Graeme 🦀 (@hexagraeme) April 18, 2024

One user pointed out how this video is another example of wealth inequality and the ever-growing divide between the rich and poor in Toronto.

lady with extreme wealth complains about wealth inequality

🤷♂️

lady, we’ve been screaming for years.

— Dave Jay (@DaveJayToronto) April 18, 2024

According to Toronto Police data, major crime indicators have spiked year-to-date in 2024 in several categories, including assault (+10.9%) and robbery (+19.7%).

Tech

Forged by friendship, this year's Stampede boots pay tribute to Stoney Nakoda iconography – MSN

If not for Duane Mark and Lloyd Templeton’s budding friendship, this year’s Calgary Stampede boot design would have never existed.

While the boot was only constructed in recent months, the process began when Templeton, a Calgary-raised artist in his early 20s, approached Mark with a request to use images of the Stoney Nakoda teepee-holder and educator for artwork he was preparing for the Calgary Stampede.

The two clicked from the get-go. By November, after hours together, Templeton’s piece featuring Mark — dressed in full regalia standing in the foreground of the Calgary Tower among a diverse group of parade participants — was chosen as the 2024 Stampede poster artwork.

On Thursday, Templeton’s art was unveiled as the design for this year’s Stampede boot — now the second product of their friendship that’s been produced for this year’s 10-day rodeo and fair.

“What comes to mind is the growth of a young man named Lloyd,” Mark said, when asked what he sees in this year’s boot design.

The artwork on the exterior reflects key Stoney Nakoda First Nation and Treaty 7 iconography, Templeton said at Thursday’s unveiling. Stitchings of Alberta’s mountain range and the golden eagle flying through a rising sun — two important symbols for the First Nation’s culture — line the outside of the boot.

The boot’s interior is inscribed with the words Oyadé Gichiyabi, Ahogichopabi Îyûhabith, a Stoney phrase that roughly translates to “be empowered to foster peace and respect” and was selected at Mark’s recommendation.

A recent graduate from the Alberta University of the Arts, Templeton is becoming a household name in Calgary’s arts community at a pace that’s not lost on him.

“Just last year I was making school projects, and a year later, there’s going to be people wearing my art. That’s nuts,” he said.

Working in three dimensions was a new challenge for Templeton. To start, he would tape paper to the back of the boot to get a feel for the shapes he needed to produce. He then drew the designs in pencil, scanned them into his computer and converted them into a special file that allowed them to be etched by laser onto the boots.

“My poster was oil paint, a very traditional process,” he said. “I was kind of making it up on the go to see what worked. I liked the challenge of that.”

Margaret Holloway, the Stampede’s 2024 First Nations Princess who also provided input on the boot design, said she was “breathtaken, speechless” when she first saw the design. Breaking from tradition, this year’s design will be available on five different shades of boot. Alberta Boot normally creates one special boot for each Stampede.

The 22-year-old jingle dancer is the first person from Stoney Nakoda to be named First Nations Princess in more than 20 years.

Holloway’s family teepee at the Elbow River Camp has three large eagles on it, she said.

“Back home, we see the eagles fly and we feel blessed by their presence, and we feel amazed just by their beauty of soaring in the skies. To see that on this year’s Stampede boot was absolutely unbelievable.”

With their latest creation publicly revealed, Templeton and Mark’s friendship will extend far past their artistic collaboration.

“He’s the coolest dude. We have a lot in common — a good sense of humour, listen to the same music and movies. We make a lot of the same jokes,” Templeton said.

Mark said he’s watched the young artist grow and mature in front of his eyes. Over the past year they’ve discussed “deep Indigenous philosophy,” which Templeton has evidently absorbed into his own life, he said.

“We became the best of friends and will continue to be the best of friends,” Mark said.

X: @mattscace67

Tech

Huawei's new Kirin 9010 brings minor CPU improvements – GSMArena.com news

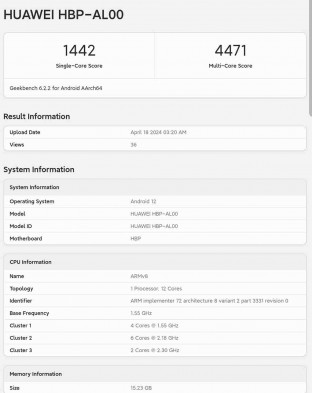

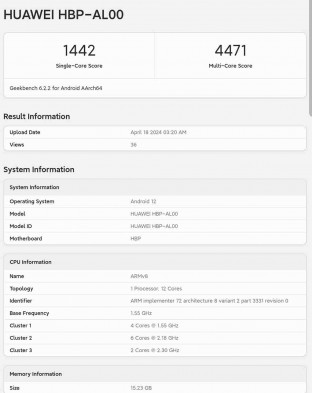

Huawei announced the Pura 70 series today, and once again offered no details regarding the chipsets. However, early benchmarks confirmed they feature a new platform called Kirin 9010, which has an 8-core CPU, identified by apps as a 12-core unit due to hyperthreading.

Hyperthreading is nothing new in the chipset industry, and the Taishan cores have been supporting the technology for some time; it has been part of the Kirin 9000S and is now part of the 9010 as well.

First Geekbench results revealed a minor improvement in raw performance, coming from slightly faster core speeds. The numbers show single-digit percentage improvements in both single-core and multi-core tests.

Kirin 9010 vs Kirin 9000S on Geekbench

The actual octa-core combination of Kirin 9010 is as follows: one 2.30 GHz Taishan Big, three 2.18 GHz Taishan Mid and four 1.55 GHz Cortex-A510. The GPU remains Maleoon 910 at 750 MHz.
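The gap between eight physical cores and the twelve that apps report can be checked with a little arithmetic. The sketch below assumes, as the mention of the Taishan cores suggests but Huawei has not confirmed here, that each Taishan core runs two hardware threads while each Cortex-A510 runs one. The `CLUSTERS` table and `count_cores` helper are illustrative names, not part of any Huawei or Geekbench tooling.

```python
# Core clusters as listed above: (name, physical cores, clock in GHz, threads per core).
# ASSUMPTION: two threads per Taishan core, one per Cortex-A510.
CLUSTERS = [
    ("Taishan Big", 1, 2.30, 2),
    ("Taishan Mid", 3, 2.18, 2),
    ("Cortex-A510", 4, 1.55, 1),
]


def count_cores(clusters):
    """Return (physical cores, logical cores) summed across all clusters."""
    physical = sum(cores for _, cores, _, _ in clusters)
    logical = sum(cores * threads for _, cores, _, threads in clusters)
    return physical, logical


physical, logical = count_cores(CLUSTERS)
print(f"{physical} physical cores, {logical} logical cores")
# prints "8 physical cores, 12 logical cores"
```

Under that assumption the four Taishan cores contribute eight threads and the four Cortex-A510 cores contribute four, which matches the 12-core figure that benchmark apps display.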
