The federal government is ordering the dissolution of TikTok’s Canadian business after a national security review of the Chinese company behind the social media platform, but stopped short of ordering people to stay off the app.

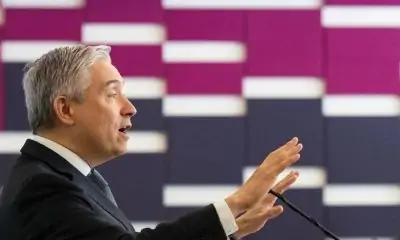

Industry Minister François-Philippe Champagne announced the government’s “wind up” demand Wednesday, saying it is meant to address “risks” related to ByteDance Ltd.’s establishment of TikTok Technology Canada Inc.

“The decision was based on the information and evidence collected over the course of the review and on the advice of Canada’s security and intelligence community and other government partners,” he said in a statement.

The announcement added that the government is not blocking Canadians’ access to the TikTok application or their ability to create content.

However, it urged people to “adopt good cybersecurity practices and assess the possible risks of using social media platforms and applications, including how their information is likely to be protected, managed, used and shared by foreign actors, as well as to be aware of which country’s laws apply.”

Champagne’s office did not immediately respond to a request for comment seeking details about what evidence led to the government’s dissolution demand, how long ByteDance has to comply and why the app is not being banned.

A TikTok spokesperson said in a statement that the shutdown of its Canadian offices will mean the loss of hundreds of well-paying local jobs.

“We will challenge this order in court,” the spokesperson said.

“The TikTok platform will remain available for creators to find an audience, explore new interests and for businesses to thrive.”

The federal Liberals ordered a national security review of TikTok in September 2023, but it was not public knowledge until The Canadian Press reported in March that it was investigating the company.

At the time, it said the review was based on the expansion of a business, which it said constituted the establishment of a new Canadian entity. It declined to provide any further details about what expansion it was reviewing.

A government database showed a notification of new business from TikTok in June 2023. It said Network Sense Ventures Ltd. in Toronto and Vancouver would engage in “marketing, advertising, and content/creator development activities in relation to the use of the TikTok app in Canada.”

Even before the review, ByteDance and TikTok were lightning rod for privacy and safety concerns because Chinese national security laws compel organizations in the country to assist with intelligence gathering.

Such concerns led the U.S. House of Representatives to pass a bill in March designed to ban TikTok unless its China-based owner sells its stake in the business.

Champagne’s office has maintained Canada’s review was not related to the U.S. bill, which has yet to pass.

Canada’s review was carried out through the Investment Canada Act, which allows the government to investigate any foreign investment with potential to might harm national security.

While cabinet can make investors sell parts of the business or shares, Champagne has said the act doesn’t allow him to disclose details of the review.

Wednesday’s dissolution order was made in accordance with the act.

The federal government banned TikTok from its mobile devices in February 2023 following the launch of an investigation into the company by federal and provincial privacy commissioners.

— With files from Anja Karadeglija in Ottawa

This report by The Canadian Press was first published Nov. 6, 2024.